Designing and optimizing micromachined ultrasound transducers (MUTs), whether PMUTs or CMUTs, requires accuracy at scale.

Yet traditional simulation approaches are often constrained to individual cells or limited structures, leaving important array-level effects poorly understood until expensive and time-consuming testing begins.

This gap can lead to longer development cycles and a higher risk of failed devices.

In this webinar, we will introduce an improved approach: full array-scale MUT simulations with fully coupled multiphysics.

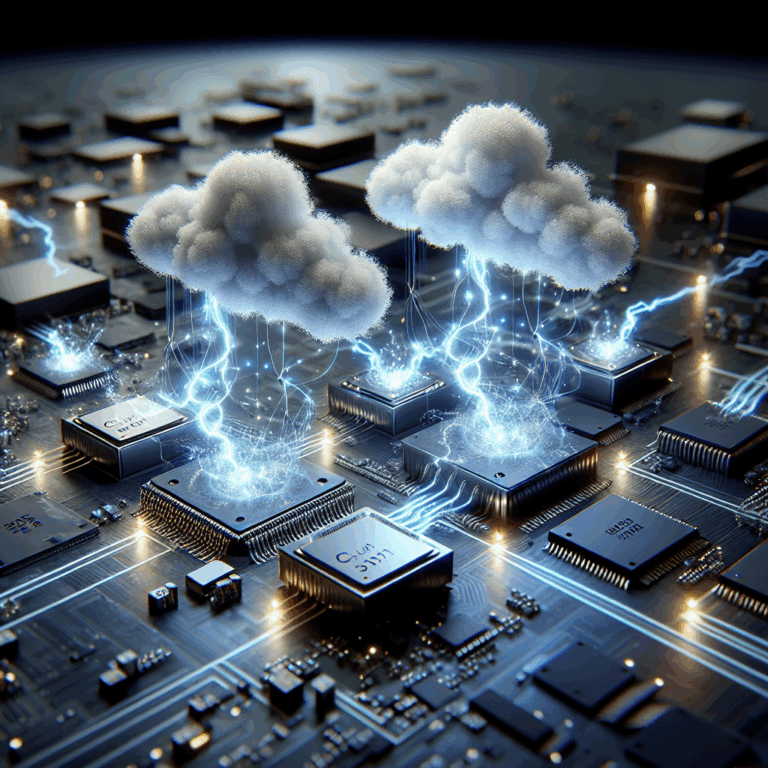

By leveraging Quanscient’s cloud-native platform, engineers can model entire transducer arrays with all relevant physical interactions (electrical, mechanical, acoustic, and more), capturing system-level behaviors such as beam patterns and cross-talk that single-cell simulations miss.

Cloud scalability also enables extensive design exploration.

Through parallelization, users can run Monte Carlo analyses, parameter sweeps, and large-scale models in a fraction of the time, enabling rapid optimization and higher throughput in the design process.

This not only accelerates R&D but also leads to more reliable designs before fabrication.

The session will feature real-world case examples with detailed insights into the methodology and key metrics.

Attendees will gain a practical understanding of how array-scale simulation can improve MUT design workflows by reducing reliance on costly prototypes, minimizing risk, and delivering better device performance.

Join us to learn how array-scale MUT simulations in the cloud can improve MUT design accuracy, efficiency, and reliability.

Register now for this free webinar!