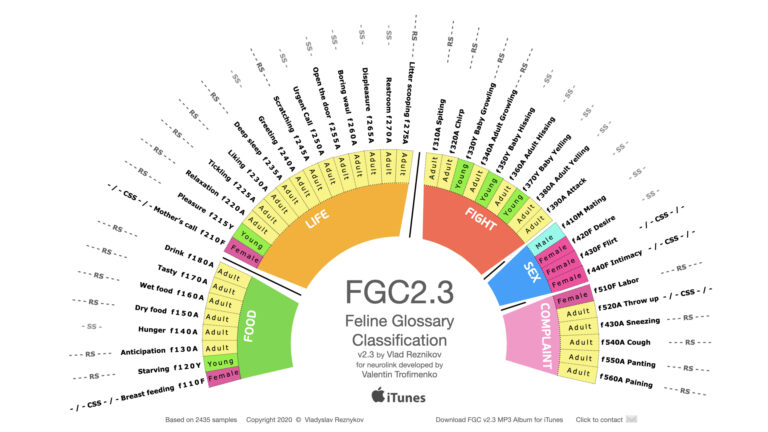

Global Breakthrough: FGC2.3 Feline Vocalization Project Nears Record Readership With Over 14,000 Reads of Its Cat-Human Translation Research

MIAMI, FL – The FGC2.3: Feline Vocalization Classification and Cat Translation Project, authored by Dr. Vladislav Reznikov, has passed a notable milestone: it has surpassed 14,000 reads on ResearchGate and is climbing quickly toward record levels for research in animal communication and artificial intelligence. This pioneering work aims to develop the world’s first scientifically grounded…