From the honey in your tea to the blood in your veins, materials all around you have a hidden talent. Some of these substances, when engineered in specific ways, can act as memristors—electrical components that can “remember” past states.

Memristors are devices that store data as particular levels of resistance, and they are often used in chips that both perform computations and store data. Today, they are constructed as a thin layer of titanium dioxide or similar dielectric material sandwiched between two metal electrodes. Applying enough voltage to the device causes tiny regions in the dielectric layer, where oxygen atoms are missing, to form filaments that bridge the electrodes or otherwise move in a way that makes the layer more conductive. Reversing the voltage undoes the process. This reversible change essentially gives the memristor a memory of past electrical activity.
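For readers who want to see the "memory" effect in numbers, here is a minimal sketch of a toy memristor, assuming a simple linear-drift model in which a state variable `x` (the conductive fraction of the layer) moves with the current passing through the device. The resistance limits, the drift constant `k`, and the time step are illustrative assumptions, not values from any of the studies described in this article.

```python
def resistance(x, r_on=100.0, r_off=16_000.0):
    """Resistance in ohms for a conductive fraction x between 0 and 1 (assumed limits)."""
    return r_on * x + r_off * (1.0 - x)


def apply_voltage(x, volts, steps, dt=1e-3, k=1e4):
    """Advance the state x while a constant voltage is applied.

    k is a hypothetical lumped drift constant; dt is the time step in seconds.
    """
    for _ in range(steps):
        current = volts / resistance(x)   # Ohm's law at the present state
        x += k * current * dt             # state drifts in the direction of the current
        x = min(1.0, max(0.0, x))         # the state cannot leave the layer
    return x


x = 0.1
r_start = resistance(x)

x = apply_voltage(x, +1.0, 500)   # positive voltage: layer becomes more conductive
r_set = resistance(x)

x = apply_voltage(x, 0.0, 500)    # no voltage: the state, and so the resistance, is retained
r_hold = resistance(x)

x = apply_voltage(x, -1.0, 500)   # reversed voltage: the change is undone
r_reset = resistance(x)

print(f"start {r_start:.0f} ohms, set {r_set:.0f}, hold {r_hold:.0f}, reset {r_reset:.0f}")
```

In this sketch the resistance falls under positive voltage, stays put when the voltage is removed, and climbs back when the voltage is reversed, which is the behavior the paragraph above describes. Real devices are far less tidy than this idealized model.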

Last month, while exploring the electrical properties of fungi, a group at The Ohio State University found first-hand that some organic memristors have benefits beyond those made with conventional materials. Not only can shiitake act as a memristor, for example, but it may be useful in aerospace or medical applications because the fungus demonstrates high levels of radiation resistance. The project “really mushroomed into something cool,” lead researcher John LaRocco says with a smirk.

Researchers have learned that other unexpected materials may give memristors an edge. Devices made from them may be more flexible than typical memristors, or even biodegradable. Here’s how researchers have made memristors from strange materials, and the potential benefits these odd devices could bring:

Mushrooms

LaRocco and his colleagues were searching for a proxy for brain circuitry to use in electrical stimulation research when they stumbled upon something interesting—shiitake mushrooms are capable of learning in a way that’s similar to memristors.

The group set out to evaluate just how well shiitake can remember electrical states by first cultivating nine samples and curating optimal growing conditions, including feeding them a mix of farro, wheat, and hay.

Once fully matured, the mushrooms were dried and rehydrated to a level that made them moderately conductive. In this state, the fungi’s structure includes conductive pathways that emulate the oxygen vacancies in commercial memristors. The scientists plugged them into circuits and put them through voltage, frequency, and memory tests. The result? Mushroom memristors.

It may smell “kind of funny,” LaRocco says, but shiitake performs surprisingly well when compared to conventional memristors. Around 90 percent of the time, the fungus maintains ideal memristor-like behavior for signals up to 5.85 kilohertz. While traditional materials can function at frequencies orders of magnitude higher, these numbers are notable for biological materials, he says.

What fungi lack in performance, they may make up for in other properties. For one, many mushrooms—including shiitake—are highly resistant to radiation and other environmental dangers. “They’re growing in logs in Fukushima and a lot of very rough parts of the world, so that’s one of the appeals,” LaRocco says.

Shiitake are also an environmentally friendly option that’s already commercialized. “They’re already cultured in large quantities,” LaRocco explains. “One could simply leverage existing logistics chains” if the industry wanted to commercialize mushroom memristors. The use cases for this product would be niche, he thinks, and would center around the radiation resistance that shiitake boasts. Mushroom GPUs are unlikely, LaRocco says, but he sees potential for aerospace and medical applications.

Honey

In 2022, engineers at Washington State University interested in green electronics set out to study whether honey could serve as a good memristor. “Modern electronics generate 50 million tons of e-waste annually, with only about 20 percent recycled,” says Feng Zhao, who led the work and is now at Missouri University of Science and Technology. “Honey offers a biodegradable alternative.”

The researchers first blended commercial honey with water and stored it in a vacuum to remove air bubbles. They then spread the mixture on a piece of copper, baked the whole stack at 90 °C for nine hours to stabilize it, and, finally, capped it with circular copper electrodes, completing the honey-based memristor sandwich.

The resulting 2.5-micrometer-thick honey layer acted like the oxide dielectric in conventional memristors: a place for conductive pathways to form and dissolve, changing resistance with voltage. In this setup, when voltage is applied, copper filaments extend through the honey.

The honey-based memristor was able to switch from low to high resistance in 500 nanoseconds and back to low in 100 nanoseconds, which is comparable to speeds in some non-food-based memristive materials.

One advantage of honey is that it’s “cheap and widely available, making it an attractive candidate for scalable fabrication,” Zhao says. It’s also “fully biodegradable and dissolves in water, showing zero toxic waste.” In the 2022 paper, though, the researchers note that for a honey-based device to be truly biodegradable, the copper components would need to be replaced with dissolvable metals. They suggest options like magnesium and tungsten, but also write that the performance of memristors made from these metals is still “under investigation.”

Blood

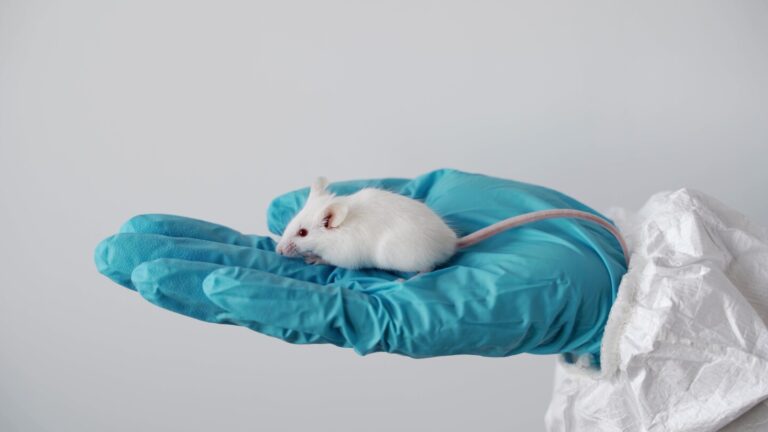

In 2011, just three years after the first memristor was built, a group in India wondered whether blood would make a good memristor, considering it a potential means of delivering healthcare.

The experiments were pretty simple. The researchers filled a test tube with fresh, type O+ human blood and inserted two conducting wire probes. The wires were connected to a power supply, creating a complete circuit, and voltages of one, two, and three volts were applied in repeated steps. Then, to test the memristor qualities of blood as it exists in the human body, the researchers set up a “flow mode” that applied voltage to the blood as it flowed from a tube at up to one drop per second.

The experiments were preliminary and only measured current passing through the blood, but resistance could be set by applying voltage. Crucially, resistance changed by less than 10 percent in the 30-minute period after voltage was applied. In the International Journal of Medical Engineering and Informatics, the scientists wrote that, because of these observations, their contraption “looks like a human blood memristor.”

They suggested that this knowledge could be useful in treating illness. Sick people may have ion imbalances in certain parts of their bodies—instead of prescribing medication, why not employ a circuit component made of human tissue to solve the problem? In recent years, blood-based memristors have been tested by other scientists as means to treat conditions ranging from high blood sugar to nearsightedness.